As artificial intelligence (AI) continues to evolve and permeate various aspects of society, the ethical implications of these technologies are more critical than ever.

In 2021, I had the opportunity to discuss these issues with Mira Lane, Principal PM for Ethics & Society at Microsoft. Though our conversation is two years old, it remains relevant: the ethical frameworks we talked about have only grown in importance as AI systems have become more ubiquitous and powerful. This piece reflects on the insights from that discussion and explores how intentional ethical frameworks can shape both technology and society for the better.

The Importance of Data Provenance in Ethical AI

One of the critical challenges in developing ethical AI is establishing the provenance of the data used to train these systems. AI learns from data, and if that data is flawed or biased, the system can inadvertently perpetuate those biases, leading to negative societal impacts. In our conversation, Mira and I discussed how understanding the origins of training data is essential not only for accuracy but also for accountability.

From my perspective, ensuring data provenance goes beyond just mitigating risk — it’s about designing systems that reflect the diversity of the world we live in. When AI systems are built on ethically sourced, representative data, they are more likely to produce outcomes that serve everyone fairly. Ethical AI is not just a technical challenge; it’s a societal responsibility that requires careful attention to the origins of data and the people it impacts.

Designing Environments That Shape Behavior at Scale

As someone deeply rooted in behavior design and persuasive technology, I’ve always been fascinated by how the environments we create — whether physical or digital — can shape behavior at scale. AI systems are no different; they are essentially behavioral tools that influence how people interact with technology and with one another.

When we design AI, we are also designing the conditions for certain behaviors to emerge. If we want AI to contribute to a more just, peaceful, and equitable world, we need to be intentional about how we design these systems. It’s not just about avoiding harm — it’s about building AI that fosters cooperation, empathy, and inclusivity.

In my work at the Peace Innovation Lab at Stanford, we’ve focused on how technology can be a catalyst for relational health — the quality of our relationships with one another and our communities. AI has the power to either enhance or degrade these relationships, depending on how it’s designed. The choices we make in building these systems are not just technical — they are moral choices that will shape society for years to come.

Microsoft’s Harms Modeling Framework: A Blueprint for Ethical Design

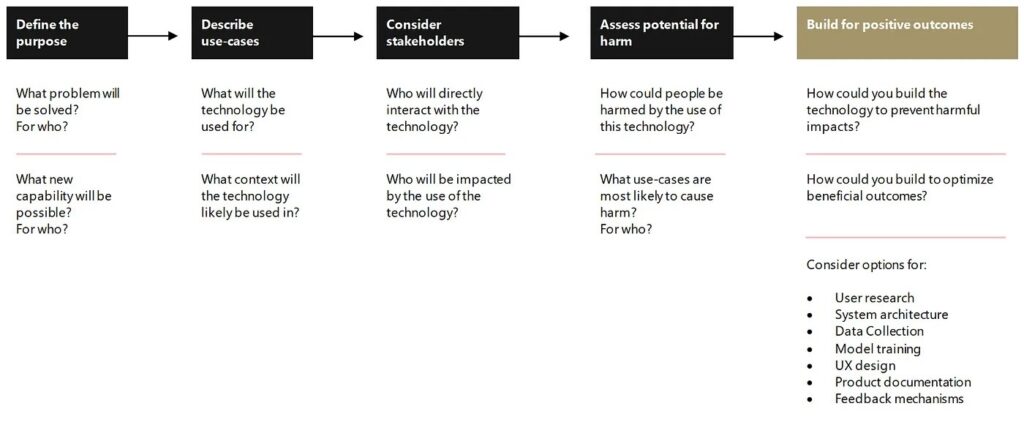

Microsoft Harms Modeling Framework

One of the most compelling tools we discussed was Microsoft’s Harms Modeling Framework, which provides a structured way to anticipate and mitigate potential harms that could arise from AI systems. By breaking down risks across various dimensions — physical, emotional, and societal — this framework helps ensure that ethical considerations are built into the development process from the very beginning.

What I find most powerful about this framework is that it encourages developers to think about how their systems will impact people on a behavioral and societal level. It’s not just about preventing bias or avoiding negative outcomes; it’s about designing AI systems that actively promote positive behaviors and social outcomes.

For me, the Harms Modeling Framework aligns closely with the principles of behavior design. Whether we are designing a physical space, a digital tool, or an AI system, we need to ask: How will this environment shape behavior? Will it encourage trust, fairness, and cooperation? If we want technology to serve as a force for good, these are the kinds of questions that need to be at the forefront of the design process.

Ethics as a Tool for Positive Social Outcomes

At its core, the conversation around tech ethics should not be limited to mitigating harm. Ethics is a powerful tool for shaping the kind of world we want to live in. When ethical frameworks are embedded into the development process, they create the conditions for good outcomes — outcomes that not only prevent harm but also actively contribute to the well-being of society.

In the realm of AI, this means designing systems that promote relational health, social cohesion, and equity. It means moving beyond compliance with regulations and thinking about how technology can be used to foster a more peaceful and just world.

As I continue to explore these intersections of technology, ethics, and peace, my focus is on how we can intentionally design systems that reflect the values we want to see in the world. If we can do that, whether through Microsoft’s Harms Modeling Framework or other approaches, then AI and other technologies can truly become tools for positive transformation.

The Role of Behavior Design in Shaping the Future of AI

Behavior design teaches us that the built environment, whether physical or digital, has the power to influence behavior at scale. That principle applies directly to the future of AI: a more just, peaceful, and equitable world will not emerge from these systems by accident. We have to design for those outcomes from the start.

This is the power of persuasive technology — it allows us to design environments that facilitate the emergence of ethical behaviors, cooperation, and trust. When we design AI systems with these values in mind, we are not just building technology; we are building the conditions for a more peaceful and equitable world.

---

Resources to learn more:

My interview with Mira Lane: https://youtu.be/HwhG4jtU15o

Microsoft Responsible Innovation: A Best Practices Toolkit: https://learn.microsoft.com/en-us/azure/architecture/guide/responsible-innovation/

Microsoft Harms Modeling Framework: https://learn.microsoft.com/en-us/azure/architecture/guide/responsible-innovation/#harms-modeling